January 9, 2026, 02:20 UTC. Anthropic flipped a switch.

Third-party tools using Claude Pro/Max subscriptions stopped working. No warning. No migration path. Just a new error message: “This credential is only authorized for use with Claude Code.”

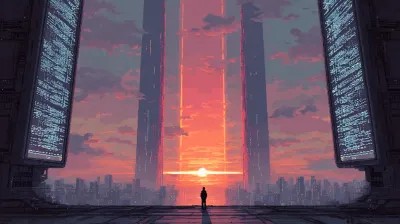

The walled garden is closing.

What Happened

Anthropic deployed “strict new technical safeguards” blocking subscription OAuth tokens from working outside their official Claude Code CLI. Tools like OpenCode had been spoofing the Claude Code client identity, sending headers that made Anthropic’s servers think requests came from the official tool.

That stopped working overnight.

Thariq Shihipar (Anthropic): “Yesterday we tightened our safeguards against spoofing the Claude Code harness after accounts were banned for triggering abuse filters from third-party harnesses.”

Within hours, GitHub issues flooded in. 147+ reactions on the main thread. 245+ points on Hacker News. Developers paying $100-200/month discovered their workflows were broken.

Who Got Hit

- OpenCode users: The 56k-star open source Claude Code alternative. Dead in the water.

- xAI employees: Cut off from Claude via Cursor. Tony Wu told staff: “We will get a hit on productivity, but it rly pushes us to develop our own coding product / models.”

- OpenAI employees: Already blocked in August 2025 for using Claude to benchmark GPT-5.

- Anyone using subscription OAuth outside Claude Code: If you weren’t using the official CLI, you got locked out.

Standard API keys still function. OpenRouter integration still works. The block specifically targets subscription OAuth tokens being used in third-party harnesses. If you’re paying per-token through the API, you’re unaffected.

The Economic Reality

The crackdown makes sense when you follow the money.

A $200/month Max subscription buys flat-rate access to Claude Code with high usage caps. The same usage via the API would cost $1,000+ per month for heavy users. Third-party tools removed Claude Code’s artificial speed limits, enabling the kind of autonomous loops I wrote about in my Ralph Wiggum post.

HN user dfabulich: “In a month of Claude Code, it’s easy to use so many LLM tokens that it would have cost you more than $1,000 if you’d paid via the API.”

This was subscription arbitrage. Pay consumer prices, get enterprise-grade throughput. Run overnight agent loops that would be cost-prohibitive on metered billing.

Anthropic closed the loophole.

The Ralph Wiggum Connection

The timing isn’t coincidental. The Ralph Wiggum technique went viral in late December. Autonomous loops where Claude works for hours without intervention. YC hackathon teams shipping 6+ repos overnight for $297 in API costs.

Those economics only work on a flat-rate subscription. When you’re burning through tokens at 3am while you sleep, metered billing destroys the ROI.

Third-party tools like OpenCode made Ralph Wiggum loops easier to run. Better UI, better logging, better control. The technique exploded. Anthropic’s infrastructure costs exploded with it.

Anthropic ships the Ralph Wiggum plugin officially. They want you running autonomous loops. Just not in someone else’s harness.

The Backlash

DHH (creator of Rails): “Seems very customer hostile.”

Users who’d invested in OpenCode workflows found themselves locked out mid-project:

@Naomarik on GitHub: “Using CC is like going back to stone age. I immediately downgraded my $200/month Max subscription, then canceled entirely because it was unusable for the workflows I have.”

The HN thread split predictably. Some called it “an unusual L for Anthropic” and argued OpenCode’s engineering is ahead of Claude Code’s. Others argued OpenCode “brought this on themselves” by violating ToS from the start.

Both are true. ToS violations don’t justify zero-notice lockouts. Zero-notice lockouts don’t make ToS violations acceptable.

OpenCode’s Response

Within hours of the block, OpenCode shipped alternatives:

- Workaround merged: PR #14 changed the tool prefix from `oc_` to `mcp_`, bypassing the initial detection.

- ChatGPT Plus/Pro support: v1.1.11 added `/connect` for OpenAI OAuth.

- Codex integration: Working with OpenAI on subscription parity.

- OpenCode Black: New $200/month tier routing through enterprise API gateway

The message from OpenCode’s dax was clear: if Anthropic doesn’t want OpenCode users, OpenAI does.

If you built workflows around claude-launcher or OpenRouter integration, you’re already insulated. The same goes for the models from my open source agentic moment post: GLM-4.7 and MiniMax M2.1. MIT licensed. No vendor lock-in.

The Bigger Picture

This isn’t just about OpenCode. Anthropic also cut off:

- xAI via Cursor: Competitors can’t use Claude to build competing products

- OpenAI (August 2025): Blocked for benchmarking GPT-5 with Claude

The pattern is consolidation. Anthropic wants you in their ecosystem, using their tools, on their terms. The open source models targeting Claude Code compatibility suddenly look more strategic.

Jesse Hanley on X: “The biggest L in the whole Anthropic vs OpenCode situation is that they’ve just radicalised the entire anomalyco team and the supporting OSS community. Models are not sticky and this action is an admission to that.”

What This Means

Three takeaways:

- Subscription arbitrage is over: If you were using third-party tools to get unlimited Claude at consumer prices, that window closed. Pay API rates or use official tooling.

- Vendor lock-in is real: I’ve written about Claude Code’s guardrails, hooks, and plugin ecosystem. Great features. Also great lock-in. When the vendor decides to restrict access, you’re at their mercy.

- Diversify your model dependencies: The open source agentic moment matters more now. GLM-4.7: 7x cheaper, MIT licensed. MiniMax M2.1: 10x cheaper. No vendor can flip a switch and break your workflow.
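One way to make that diversification concrete is a fallback chain: send each request through an ordered list of providers and move on when one refuses. Below is a minimal Python sketch, assuming a hypothetical `Provider` adapter per backend. The provider names and the stub functions are illustrative placeholders, not real SDK calls; in practice each `complete` would wrap a real client (the Anthropic API with a standard key, OpenRouter, a self-hosted open-weights model).

```python
from dataclasses import dataclass
from typing import Callable, Sequence


class ProviderError(Exception):
    """Raised by a provider adapter when it cannot serve a request (revoked auth, outage, quota)."""


@dataclass
class Provider:
    name: str
    # Hypothetical adapter: takes a prompt, returns a completion string.
    complete: Callable[[str], str]


def complete_with_fallback(prompt: str, providers: Sequence[Provider]) -> tuple[str, str]:
    """Try each provider in order; return (provider_name, completion) from the first that succeeds."""
    errors: list[str] = []
    for provider in providers:
        try:
            return provider.name, provider.complete(prompt)
        except ProviderError as exc:
            # Record why this provider failed, then fall through to the next one.
            errors.append(f"{provider.name}: {exc}")
    raise ProviderError("all providers failed; " + "; ".join(errors))


# Hypothetical stubs standing in for real clients.
def revoked_subscription(prompt: str) -> str:
    raise ProviderError("This credential is only authorized for use with Claude Code.")


def open_weights_stub(prompt: str) -> str:
    return f"[stub answer to: {prompt}]"


chain = [
    Provider("claude-subscription", revoked_subscription),
    Provider("open-weights-fallback", open_weights_stub),
]
name, text = complete_with_fallback("refactor utils.py", chain)
print(name)  # open-weights-fallback
```

The caller never hard-codes a vendor, so a revoked credential degrades to a different model instead of a broken workflow.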

I still use Claude Code daily. I still think it’s the best agentic coding tool available. But “best” doesn’t mean “trustworthy vendor behavior.” The “denial, then admission” pattern applies to business practices too.

The era of unlimited Claude through clever workarounds is over. Anthropic is building a walled garden. You can stay inside and accept the constraints, or you can start building exits.

I’m building exits.