The Problem

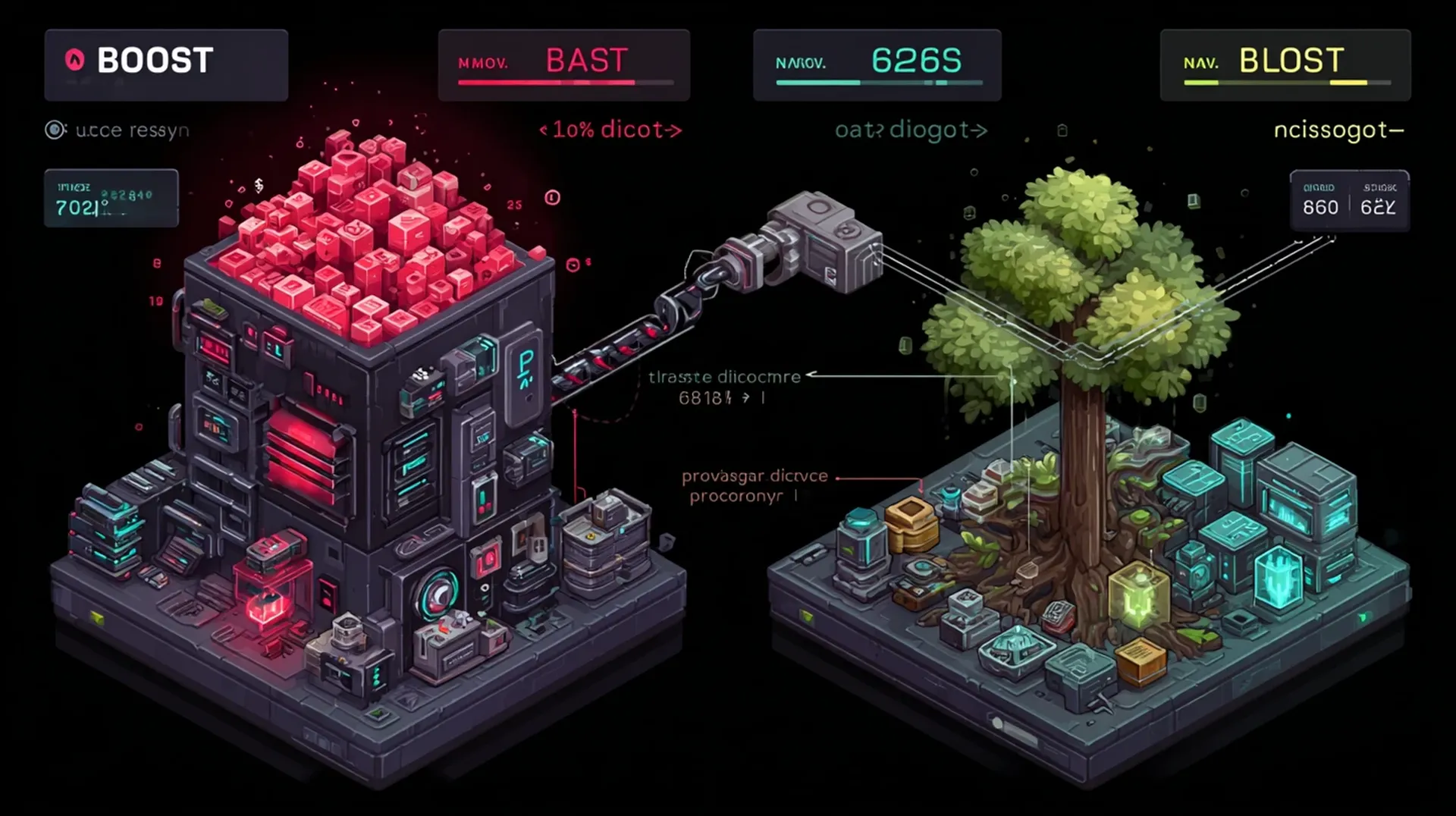

Anthropic documented it in their “Code execution with MCP” post: loading all MCP tool definitions upfront burns excessive context tokens. Three database servers? 28,700 tokens before doing anything. Tool definition overload makes multi-MCP projects expensive.

Their progressive discovery pattern solved it: a 98.7% token reduction by exploring tools on demand through a filesystem API. LLMs write code well, so express MCPs as code APIs, not direct tool calls.
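The core idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the generated code: an in-memory catalog stands in for the filesystem API, and the names `ProgressiveCatalog`, `listServers`, `listTools`, `getDefinition`, and `upfrontSize` are hypothetical. The agent only pays for what it opens: server names first, then tool names, then one full definition.

```typescript
// Hypothetical sketch of progressive discovery: an in-memory catalog stands in
// for the filesystem API, and full definitions are only revealed on request.
export interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>;
}

export type Catalog = Record<string, Record<string, ToolDefinition>>;

export class ProgressiveCatalog {
  private readonly catalog: Catalog;

  constructor(catalog: Catalog) {
    this.catalog = catalog;
  }

  // Level 1: server names only (a few tokens per server)
  listServers(): string[] {
    return Object.keys(this.catalog).sort();
  }

  // Level 2: tool names for a single server
  listTools(server: string): string[] {
    const tools = this.catalog[server];
    if (!tools) throw new Error(`unknown server: ${server}`);
    return Object.keys(tools).sort();
  }

  // Level 3: the full definition (the expensive part), loaded only when needed
  getDefinition(server: string, tool: string): ToolDefinition {
    const definition = this.catalog[server]?.[tool];
    if (!definition) throw new Error(`unknown tool: ${server}/${tool}`);
    return definition;
  }
}

// Size in characters (a rough proxy for tokens) of loading everything upfront
export function upfrontSize(catalog: Catalog): number {
  return JSON.stringify(catalog).length;
}
```

With a real filesystem API, the same three levels would presumably map to directories and files; the point is that the context holds one definition instead of all of them.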

So I built it. Chrome DevTools MCP burned 17.5k tokens; after implementing progressive discovery, I got a 96% reduction. Then I tested it in production on a real project: 4 database MCPs across different environments, replaced with generated Skills. Roughly 25k tokens of overhead eliminated.

Database MCPs worked exactly as expected: SQL in, data out, zero friction. Chrome DevTools? UIDs instead of CSS selectors, fragile pattern matching, manual timing. The pattern is universal, but some MCPs are much better to work with than others.

Users wanted Skills integration, so I generated Skill descriptions from MCP metadata (database names yes, passwords no) and built deduplication: dev and prod MCPs with identical tools get their shared wrappers generated once, with a unique Skill for each server pointing to the shared code.
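The deduplication step can be sketched as grouping servers by an order-insensitive signature of their tool definitions. This is a hypothetical illustration, not the tool's actual algorithm: `planWrappers`, `toolSignature`, and the `.mcp-wrappers/shared-N` directory naming are assumptions.

```typescript
// Hypothetical sketch of wrapper deduplication: servers with identical tool
// definitions share one generated wrapper directory; each server keeps its own Skill.
export interface ToolDef {
  name: string;
  inputSchema: unknown;
}

export interface ServerInfo {
  name: string; // e.g. "postgres-dev", "postgres-prod"
  tools: ToolDef[];
}

export interface WrapperPlan {
  sharedWrappers: Map<string, string[]>; // wrapper dir -> servers that use it
  skills: { server: string; wrapperDir: string }[]; // one Skill per server
}

// Order-insensitive signature of a server's tool set. JSON.stringify is still
// sensitive to key order inside schemas; a real implementation might canonicalize.
function toolSignature(tools: ToolDef[]): string {
  const sorted = [...tools].sort((a, b) => a.name.localeCompare(b.name));
  return JSON.stringify(sorted);
}

export function planWrappers(servers: ServerInfo[]): WrapperPlan {
  // Group server names by identical tool signatures, preserving first-seen order
  const groups = new Map<string, string[]>();
  for (const server of servers) {
    const sig = toolSignature(server.tools);
    const members = groups.get(sig) ?? [];
    members.push(server.name);
    groups.set(sig, members);
  }

  const sharedWrappers = new Map<string, string[]>();
  const skills: WrapperPlan['skills'] = [];
  let index = 0;
  for (const members of groups.values()) {
    const wrapperDir = `.mcp-wrappers/shared-${index++}`; // hypothetical naming scheme
    sharedWrappers.set(wrapperDir, members);
    for (const server of members) skills.push({ server, wrapperDir });
  }
  return { sharedWrappers, skills };
}
```

Dev and prod end up pointing at the same wrapper code while keeping separate Skills, so Claude can still choose between them by intent.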

The token savings are real, and they’re production-tested. But here’s the catch.

I just wrote about Skills’ controllability problems: LLM reasoning triggers them, you can’t invoke them explicitly, and there’s no deterministic control. So why use them here?

This use case fits the design: Skills wrapping MCP tools should be semantic and context-driven. You want Claude to reason about which database or tool to use based on task context. “Update the dev database” should trigger the dev Skill. “Check prod logs” should trigger prod. NLP-based invocation is appropriate when the decision requires understanding intent.
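What makes semantic selection work is the Skill description. As an illustration, here is a hypothetical generator for per-environment Skill files. It assumes the SKILL.md layout with `name` and `description` in YAML frontmatter; the description wording, the `renderSkill` name, and the body text are illustrative, not the tool’s actual output.

```typescript
// Hypothetical sketch: render one SKILL.md per MCP server. The description is
// what Claude reasons over, so it names the environment and database explicitly.
export interface SkillInput {
  name: string; // lowercase, hyphenated Skill name, e.g. "postgres-dev"
  environment: 'dev' | 'prod';
  engine: string; // e.g. "PostgreSQL"
  database: string; // safe metadata only, never credentials
  wrapperDir: string;
}

export function renderSkill(s: SkillInput): string {
  const description =
    `Query the ${s.environment} ${s.engine} database ${s.database}. ` +
    `Use when the task mentions the ${s.environment} environment or the ${s.database} database.`;
  return [
    '---',
    `name: ${s.name}`,
    `description: ${description}`,
    '---',
    '',
    `Call the TypeScript wrappers in ${s.wrapperDir} from code instead of loading tool definitions.`,
    '',
  ].join('\n');
}
```

Two Skills rendered this way differ only in environment and database name, which is exactly the signal Claude needs to route “Update the dev database” to the dev Skill.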

The controllability problem exists for workflow orchestration: multi-step processes where you need explicit sequencing. But for tool selection? Semantic triggering works. The key is matching the pattern to the problem.

What It Does

The tool queries MCP servers over the protocol, generates TypeScript wrappers with progressive discovery, and creates Claude Code Skills. It deduplicates shared tools (dev vs prod databases), generates semantic descriptions from metadata, and hides credentials while exposing safe context (database names, not passwords). Loading 3 MCPs directly burns 28,700 tokens; with progressive discovery, about 750. That’s a 97% saving.
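Hiding credentials while keeping useful context can be as simple as parsing the connection string and dropping the user info. A minimal sketch, assuming servers are configured with URL-style connection strings; the `safeContext` name and its output shape are hypothetical.

```typescript
// Hypothetical sketch: extract safe context from a URL-style connection string
// for a Skill description. Username and password are deliberately dropped.
export interface SafeContext {
  engine: string;
  host: string;
  port?: string;
  database?: string;
}

export function safeContext(connectionString: string): SafeContext {
  const url = new URL(connectionString);
  const database = url.pathname.replace(/^\//, '') || undefined;
  return {
    engine: url.protocol.replace(/:$/, ''), // "postgres:" -> "postgres"
    host: url.hostname,
    ...(url.port ? { port: url.port } : {}),
    ...(database ? { database } : {}),
    // url.username and url.password are never copied into the result
  };
}
```

Non-URL formats, such as semicolon-separated SQL Server connection strings, would need their own parser.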

Works with databases (Postgres, MySQL, MSSQL, MongoDB, Redis), Chrome DevTools, filesystem, git, any MCP server. But here’s what I learned testing it in production.

Try It

```shell
npx mcp-code-wrapper .                  # Current directory
npx mcp-code-wrapper /path/to/project   # Specific project
npx mcp-code-wrapper --global           # Global ~/.claude/ MCPs
claude -c                               # Restart to load Skills
```

The Reality

This isn’t the ideal solution, but it claws back precious context when you need it.

Database MCPs (the good case): A production project ran 4 database servers across environments, with 25k tokens spent upfront just on tool definitions. Progressive discovery dropped that to ~750 tokens. SQL in, data out. The mental model matches expectations. In this scenario, the wrapper works.

Chrome DevTools MCP (the bad case): It works, but it’s fundamentally awkward. It uses UIDs from the accessibility tree instead of CSS selectors. You can’t call click('#login-button'); instead you take snapshots, parse the text for element UIDs, then act on those ephemeral IDs. Pattern matching is fragile. Timing requires manual setTimeout(). Parameter naming is inconsistent (value vs text, uid vs selector). Even with helper utilities, browser automation remains brittle. The wrapper can’t fix bad API design.
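To make the pattern-matching fragility concrete, here is a minimal sketch of a UID-lookup helper. It is hypothetical: the name mirrors the `findUidByPattern` helper used with the wrappers, but its semantics (each inner array is a set of terms that must all appear on one line; outer entries are tried in order) and the snapshot line format (`uid=1_5 button "Login"`) are assumptions, not taken from the Chrome DevTools MCP documentation.

```typescript
// Hypothetical helper: scan a text snapshot for the first line containing all
// terms of a pattern (case-insensitive) and return that line's UID.
// Assumes lines shaped like: uid=1_5 button "Login"
export function findUidByPattern(snapshotText: string, patterns: string[][]): string | undefined {
  const lines = snapshotText.split('\n');
  for (const terms of patterns) {
    for (const line of lines) {
      const lower = line.toLowerCase();
      if (terms.every((term) => lower.includes(term.toLowerCase()))) {
        const match = line.match(/uid=(\S+)/);
        if (match) return match[1];
      }
    }
  }
  return undefined; // nothing matched: the caller has to handle a missing element
}
```

A label change from “Login” to “Log in” silently breaks a `['button', 'login']` pattern; a CSS selector such as `#login-button` would not care.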

The wrapper tool itself: Token savings are real (97% reduction). Progressive discovery works. Deduplication handles dev/prod configs. But both Chrome and database MCPs share the same underlying issue: you’re adding a protocol layer between your code and libraries that already work better.

First Principles

After building this tool and testing it with real MCPs, the pattern that emerged is clear: write libraries, not MCPs.

The Token Cost Trap

“But replacing MCPs saves tokens!” Yes, it does. 28,700 tokens → 750 tokens is real. Production project: 25k token overhead eliminated by wrapping 4 database MCPs across environments. These savings matter when you’re context-constrained and already committed to MCPs.
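The arithmetic behind the 97% figures is easy to check. A tiny helper (hypothetical, for illustration) applied to the token counts quoted in this post:

```typescript
// Percentage of upfront context tokens saved when overhead drops from `before` to `after`.
export function reductionPercent(before: number, after: number): number {
  if (before <= 0) throw new Error('before must be positive');
  return ((before - after) / before) * 100;
}

console.log(reductionPercent(28700, 750).toFixed(1)); // 97.4 (three database servers)
console.log(reductionPercent(25000, 750).toFixed(1)); // 97.0 (four database MCPs in production)
```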

But you’re solving the wrong problem. You’re paying context cost because Claude loads all tool definitions upfront. The solution isn’t wrapping MCPs; it’s not using MCPs in the first place.

Native TypeScript libraries:

- Zero token overhead (no tool definitions to load)

- Full type safety and autocomplete

- Direct access to library features

- No protocol translation layer

- No wrapper generation needed

You’re trading 750 tokens of progressive discovery overhead for 0 tokens of native code.
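To make “native library” concrete: it can be as small as a typed module Claude imports and calls. The sketch below is hypothetical (the `User` shape, `getActiveUsers`, and the `Queryable` interface are assumptions); `Queryable` is modeled on the `query(text, values)` call that clients like pg offer, so a test double can stand in for a real database.

```typescript
// Hypothetical project library: a typed function Claude can import directly,
// with no tool definitions to load and no protocol translation in between.
export interface User {
  id: number;
  email: string;
  active: boolean;
}

// Minimal client shape, modeled on the query(text, values) call of pg's Pool
export interface Queryable {
  query(text: string, values?: unknown[]): Promise<{ rows: unknown[] }>;
}

export async function getActiveUsers(db: Queryable): Promise<User[]> {
  // Parameterized query: the value travels separately, never concatenated into SQL
  const { rows } = await db.query('SELECT id, email, active FROM users WHERE active = $1', [true]);
  return rows as User[]; // unchecked cast; validate rows if the schema can drift
}
```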

The pragmatic middle ground: If you’re already using MCPs (multi-database dev environments, external services you can’t control), this wrapper claws back ~97% of context cost. But if you’re starting fresh? Write libraries.

Browser Automation

Chrome DevTools MCP approach:

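```typescript
// take_snapshot, click, and findUidByPattern come from the generated wrappers and helper utilities
const snapshot = await take_snapshot(); // accessibility-tree snapshot as text
const uid = findUidByPattern(snapshot.content[0].text, [['button', 'login']]); // hunt for the login button's UID
await click({ uid }); // act on the ephemeral UID from the snapshot
```

Native Playwright:

```typescript
import { chromium } from 'playwright';

const browser = await chromium.launch();
const page = await browser.newPage();
await page.goto('https://example.com');
await page.click('#login-button'); // Just works: Playwright waits for the element
await browser.close();
```

Playwright gives you CSS selectors, proper waits, screenshot capabilities, network interception, and a battle-tested API. Chrome DevTools MCP gives you UID hunting and manual timing. The choice is obvious.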
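“Manual timing” in practice means writing a polling loop yourself, since the MCP path has no equivalent of Playwright’s auto-waiting actions. A minimal, hypothetical `waitFor` helper of that kind (the name and options are illustrative):

```typescript
// Hypothetical polling helper: re-run `check` until it yields a value or the
// timeout expires, instead of guessing a fixed setTimeout() delay.
export async function waitFor<T>(
  check: () => Promise<T | undefined>,
  { timeoutMs = 5000, intervalMs = 100 } = {},
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  while (Date.now() < deadline) {
    const value = await check();
    if (value !== undefined) return value;
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error(`waitFor: condition not met within ${timeoutMs} ms`);
}
```

Even with a helper like this, each check on the MCP side would mean another snapshot and another round of pattern matching.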

Database Operations

Database MCP approach:

```typescript
// Generated wrapper around the MSSQL MCP's read_data tool
import { read_data } from './.mcp-wrappers/mssql/read_data.ts';

const result = await read_data({ query: 'SELECT * FROM users WHERE active = 1' });
```

Native client:

```typescript
import { Pool } from 'pg';

const pool = new Pool({ connectionString: process.env.DATABASE_URL }); // pooled connections, reused across queries

const result = await pool.query('SELECT * FROM users WHERE active = true'); // matching rows are in result.rows
```

The MCP wrapper works, but you’re adding a protocol layer for no benefit. The native client is simpler, faster, and gives you connection pooling, transactions, and better error handling out of the box.
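Transactions are one of the things the native client gives you directly. A minimal sketch of a transaction helper, assuming pg-style clients (`pool.connect()`, `client.query()`, `client.release()`); the `TxPool`/`TxClient` interfaces and `withTransaction` are hypothetical names, typed loosely so a test double can stand in for a real database.

```typescript
// Minimal client/pool shapes modeled on pg's Pool and PoolClient (an assumption;
// check them against the pg version you use).
export interface TxClient {
  query(text: string, values?: unknown[]): Promise<unknown>;
  release(): void;
}

export interface TxPool {
  connect(): Promise<TxClient>;
}

// Run `fn` inside BEGIN/COMMIT, rolling back on any error and always
// returning the connection to the pool.
export async function withTransaction<T>(pool: TxPool, fn: (client: TxClient) => Promise<T>): Promise<T> {
  const client = await pool.connect();
  try {
    await client.query('BEGIN');
    const result = await fn(client);
    await client.query('COMMIT');
    return result;
  } catch (err) {
    await client.query('ROLLBACK');
    throw err; // surface the original failure to the caller
  } finally {
    client.release();
  }
}
```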

When MCPs Actually Make Sense

There are legitimate use cases:

- External services you don’t control: filesystem operations, git servers, remote APIs

- Standardized interfaces: same protocol across different implementations

- Security isolation: tools that need sandboxing or permission boundaries

- Cross-language integration: Python tools in Node.js projects

When They Don’t

Don’t wrap existing npm packages:

- Browser automation? Use Playwright, not Chrome DevTools MCP

- Database queries? Use native clients (pg, mysql2, mongodb), not database MCPs

- HTTP requests? Use fetch or axios, not HTTP MCPs

- File operations? Use fs/promises, not filesystem MCPs (unless you need sandboxing)

If you control both the tool and the code calling it, and a native library exists, use the library. The abstraction layer adds complexity without benefit.

The Bottom Line

Progressive discovery works. Token savings are real (97% reduction, production-tested on a 4-database project). Skills integration handles deduplication. This tool is a pragmatic solution when you’re already committed to MCPs.

But it can’t fix bad API design (Chrome DevTools), and it’s still adding protocol overhead you don’t need. The ideal pattern is simpler: write TypeScript libraries Claude imports directly.

Zero overhead. Full type safety. No protocol layer. Just code.

The wrapper tool stays up as a demonstration of progressive discovery and Skills integration. Use it to claw back context if you’re already running multiple MCPs. But for new projects? Start with libraries. Only reach for MCPs when you genuinely need the protocol layer.